
Synthetic Driver Image Generation for Human Pose-Related Tasks

Romain Guesdon,

Carlos Crispim-Junior,

Laure Tougne Rodet

International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP)

Overview

This repository contains the materials presented in the paper Synthetic Driver Image Generation for Human Pose-Related Tasks. We provide scripts and guidance to generate your own images, along with a link to download our dataset of 200k driver images.

Installation

Requirements

Blender

This script has been developed and run using Blender 3.2.0. Blender can be downloaded from the official Blender website. The following add-ons should also be enabled in Edit > Preferences > Add-ons:

- MHX2 : http://download.tuxfamily.org/makehuman/plugins/mhx-blender-latest.zip

- Import images as planes

- Lighting : Sun position

MakeHuman

Human models were generated using MakeHuman Community. We used a modified skeleton rig that you can find in the mh_models/ directory of this repo. Once MakeHuman is installed, copy the two "blender.*" files into MAKEHUMAN-COMMUNITY-ROOT\makehuman\data\rigs.

We also highly recommend installing the MassProduce plugin to quickly generate several models.
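The copy step above can also be scripted. The following is a minimal sketch, not part of the repository: the function name `copy_rig_files` and its two arguments are hypothetical, and it only assumes that the rig files match the "blender.*" pattern and that the rigs folder sits at makehuman/data/rigs under your MakeHuman root, as described above.

```python
import shutil
from pathlib import Path


def copy_rig_files(repo_models_dir, makehuman_root):
    """Copy every "blender.*" file into MakeHuman's data/rigs folder.

    Both arguments are hypothetical: repo_models_dir is the mh_models/
    directory of the cloned repo, makehuman_root is MAKEHUMAN-COMMUNITY-ROOT.
    Returns the sorted list of copied file names.
    """
    source = Path(repo_models_dir)
    target = Path(makehuman_root) / "makehuman" / "data" / "rigs"
    if not target.is_dir():
        raise FileNotFoundError(f"MakeHuman rigs folder not found: {target}")

    copied = []
    for rig_file in sorted(source.glob("blender.*")):
        if rig_file.is_file():
            # copy2 also preserves file timestamps
            shutil.copy2(rig_file, target / rig_file.name)
            copied.append(rig_file.name)
    return copied
```

Calling `copy_rig_files("mh_models", "path/to/makehuman-community")` should return two file names if the rig files are where the text above says they are.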

Models

Human models

To generate human models using MakeHuman, first set:

- in Files > Export : Mesh format = Makehuman Exchange (.mhx2), Scale Units = meter

- in Pose / Animate > Skeleton > Rig presets = Blender

Then, you can either manually modify your model or use MassProduce in the Community tab after setting all the random parameters, and export the result as MHX2.

By default, you should place the mhx2 files in mh_models/exports and the associated texture files in mh_models/exports/textures.
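Before launching a long generation run, it can help to confirm that the exports are where the script expects them. The helper below is a minimal sketch under the default layout described above; `list_human_models` is a hypothetical name and is not part of the repository.

```python
from pathlib import Path


def list_human_models(exports_dir="mh_models/exports"):
    """Return (sorted .mhx2 file names, whether a textures/ subfolder exists).

    Assumes the default layout described above; adjust exports_dir if you
    changed the path to the human models in global_script.py.
    """
    exports = Path(exports_dir)
    models = sorted(p.name for p in exports.glob("*.mhx2"))
    has_textures = (exports / "textures").is_dir()
    return models, has_textures
```

An empty model list or a missing textures folder usually means the MakeHuman export went to a different directory.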

Cars models

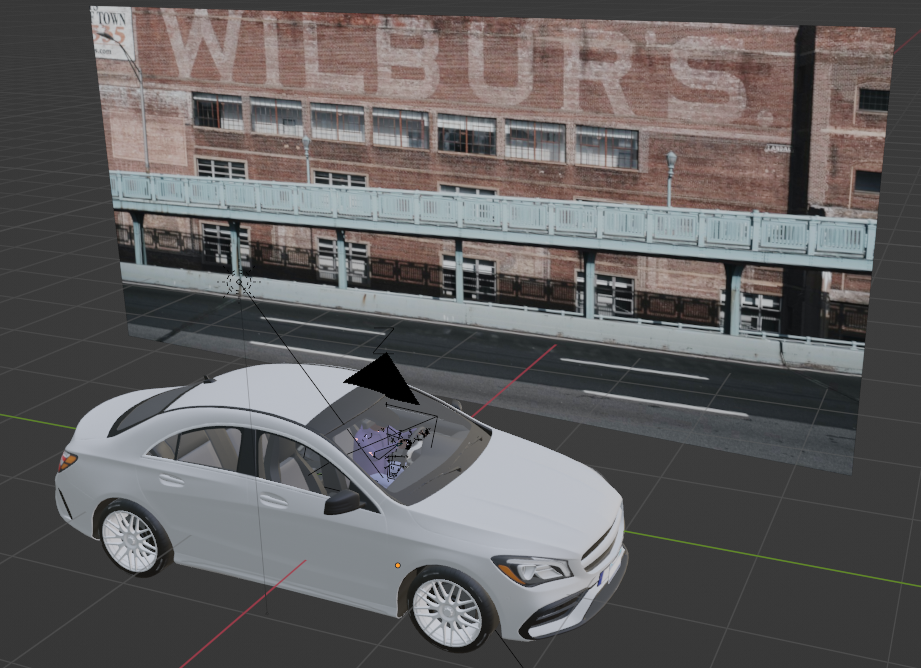

You can use 3D car models you got. Car models should be imported in an empty Blender file, with its location and rotation set to 0 and a real-size scale. The origin of the model should be placed on the driver since it will be used to place the driver. You can set an Empty Arrows object as the parent in Blender to achieve this goal, like below :

Furthermore, you can add empty meshes such as cylinders or cubes to force the hands to stay inside or outside these volumes. To do this, rename them "IN_..." or "OUT_...". We recommend placing an "OUT_" cylinder around the wheel, and an "IN_" box to delimit the inside of the car cockpit.

Finally, you can add targets for the wrists that will be used for the manual poses. To do this, place Empty Arrows at the desired location and rotation, named "Target_R_..." or "Target_L_...".

Save each .blend file in a different folder with the required textures, and place these folders in a car_models folder.
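The naming conventions above ("IN_", "OUT_", "Target_R_", "Target_L_") can be checked with a small helper before running the generation. This is an illustrative sketch only: the function names and role labels are hypothetical, and the repository's own script may interpret object names differently.

```python
# Prefixes taken from the naming conventions described above.
# The role labels on the right are hypothetical, chosen for readability.
HELPER_PREFIXES = [
    ("Target_R_", "right_wrist_target"),
    ("Target_L_", "left_wrist_target"),
    ("OUT_", "keep_hands_outside"),
    ("IN_", "keep_hands_inside"),
]


def classify_helper(name):
    """Return the role implied by an object name, or None if it has none."""
    for prefix, role in HELPER_PREFIXES:
        if name.startswith(prefix):
            return role
    return None


def group_helpers(names):
    """Group object names by role, ignoring names without a known prefix."""
    groups = {}
    for name in names:
        role = classify_helper(name)
        if role is not None:
            groups.setdefault(role, []).append(name)
    return groups
```

Inside Blender, the list of names could be obtained with `[obj.name for obj in bpy.data.objects]` and passed to `group_helpers` to verify that every helper object was renamed as intended.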

Backgrounds

Background images should be high-resolution images of size 1800x800 pixels, placed in the backgrounds folder. Each image name should start with either "day_..." or "night_...", depending on the time of day it depicts.
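A quick way to catch misnamed backgrounds is to sort the folder content by prefix. The sketch below only checks file names (not image resolution); `split_backgrounds` is a hypothetical helper and not part of the repository.

```python
from pathlib import Path


def split_backgrounds(backgrounds_dir="backgrounds"):
    """Sort background file names into day, night, and unrecognized groups.

    Only the "day_" / "night_" prefixes described above are checked;
    image size is not verified here.
    """
    groups = {"day": [], "night": [], "unrecognized": []}
    for path in sorted(Path(backgrounds_dir).iterdir()):
        if not path.is_file():
            continue
        if path.name.startswith("day_"):
            groups["day"].append(path.name)
        elif path.name.startswith("night_"):
            groups["night"].append(path.name)
        else:
            groups["unrecognized"].append(path.name)
    return groups
```

Any file listed under "unrecognized" should be renamed before running the generation script.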

Run

To generate a scene, open the base.blend file in Blender. Then, go to the Scripting tab and open global_script.py. Here are the different parameters you might want to change in this file:

- line 15: the absolute path to the cloned repository

- lines 77-84: relative paths to the blend car files, with the object name you will give to the car model in the generated scene

- line 97: path to the human models

- line 186: relative path to the output folder

- line 204: number of different scenes (configurations of person, car, background) to generate

- line 205: number of poses per scene

Be aware that the last poses will be targeted poses (if you put targets in your car models). Therefore, if you set the number of poses too low, no random poses will be generated.

Then run the script from Blender's Scripting tab. Depending on the number of scenes and poses requested, it can take from several minutes to several hours. During this time, Blender will not be usable.
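To see how the two counts interact, the arithmetic can be sketched as below. This is an assumption-laden illustration, not the repository's logic: `plan_generation` is a hypothetical helper, the number of targeted poses is a value you supply yourself (it depends on the targets in your car models), and the total assumes one rendered image per pose.

```python
def plan_generation(num_scenes, poses_per_scene, num_targeted_poses):
    """Estimate how many random and targeted poses each scene would contain.

    Assumptions (not confirmed by the repository): targeted poses fill the
    last slots of each scene, and every pose yields exactly one image.
    """
    if min(num_scenes, poses_per_scene, num_targeted_poses) < 0:
        raise ValueError("all counts must be non-negative")

    # Targeted poses cannot exceed the number of poses available per scene.
    targeted = min(num_targeted_poses, poses_per_scene)
    random_poses = poses_per_scene - targeted
    return {
        "random_per_scene": random_poses,
        "targeted_per_scene": targeted,
        "total_images": num_scenes * poses_per_scene,
    }
```

For example, with 10 scenes, 5 poses per scene, and 2 targeted poses, this sketch gives 3 random poses per scene; with 2 poses per scene and 4 targeted poses, no random pose is left.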

When the script stops, you should end up with images in the output folder. You can also manipulate the last generated scene in the Layout tab, where each pose corresponds to a time stamp.

Generated dataset

Our dataset with 200k images used in our paper can be downloaded from here.

Citation

If you use our network or our code, please cite:

@InProceedings{Guesdon_2023_Visapp,
author = {Guesdon, Romain and Crispim-Junior, Carlos and Tougne Rodet, Laure},
title = {Synthetic Driver Image Generation for Human Pose-Related Tasks},
booktitle={Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - To be published,},
year={2023},
pages={},
publisher={SciTePress},
organization={INSTICC},
}

Acknowledgments

This work was supported by the Pack Ambition Recherche 2019 funding of the French AURA Region in the context of the AutoBehave project.